plz 10

Web3

In case your Twitter feed has not succumbed to the Web3 hype wave, I will quote a reasonable definition from Slate:

Web3 refers to a potential new iteration of the internet that runs on public blockchains, the record-keeping technology best known for facilitating cryptocurrency transactions. The appeal of Web3 is that it is decentralized, so that instead of users accessing the internet through services mediated by the likes of Google, Apple, or Facebook, it’s the individuals themselves who own and control pieces of the internet. Web3 does not require “permission,” meaning that central authorities don’t dictate who uses what services, nor is there a need for “trust,” referring to the idea that an intermediary does not need to facilitate virtual transactions between two or more parties. Web3 theoretically protects user privacy better as well, because it’s these authorities and intermediaries that are doing most of the data collection.

Being as charitable as possible, I would describe the ultimate vision of Web3 advocates as an internet where networks (i.e. internet infrastructure) and applications (whether social media, financial, commerce, or otherwise) are distributed rather than centralized. This would have the critical outcome of making these networks and applications resilient to censorship and technical failure. Typically, these distributed (or decentralized) systems function by providing ownership to users via cryptocurrency tokens which align user incentives with the best outcomes for the networks and applications themselves.

That is a very interesting proposition! Certainly we have all learned that a centralized internet has its downsides. Large companies have lots of power to act unilaterally and individual users often have little recourse when they are dealt with unfairly. Our financial system is relatively slow to adapt to new technologies and industries, often taking years or decades to deliver meaningful improvements to customers. The infrastructure that powers internet businesses is highly centralized, meaning that a failure in one location can have broad impacts. These challenges are widely known; they are discussed regularly in popular media and impact the lives of many ordinary people. If we could somehow leverage distributed, cryptocurrency-based systems to address these issues then that would be an achievement indeed.

Perhaps most Web3 advocates are simply drawn to the prospect of realizing such an impactful vision. Unfortunately, there is another, simpler explanation: there is money to be made. In the last few years, the cryptocurrency ecosystem has largely failed to improve its paltry abilities in the arenas of transaction speed, fee stability, and overall utility. The primary use cases of cryptocurrency remain money laundering, illicit transactions (e.g. drugs, sex trafficking, ransomware), and speculation. But a fourth use case has emerged, enabled by Ethereum: the easy issuance of arbitrary ‘coins’ which can be ostensibly tied to some future project, manipulated into relevance, and fueled by Ponzi-like mania to enrich the creators and early adopters at the expense of the greedy and the foolish. It seems to me that this new possibility for overnight riches and the hype cycle that has emerged from it are the fuel behind the Web3 fire, regardless of what lofty aspirations its proponents proffer.

It is easy to find cover for the issuance of a new token. In Web3, tokens are the mechanism by which users gain a stake in (and control of) the applications and platforms that they use. They are the very mechanism of freedom from the centralized structures that plague the internet today! But this is far from the on-the-ground reality. In the best cases, a token’s practical purpose is wild speculation. In the worst, fraudsters free from regulation can use every dishonest tactic available in an attempt to jumpstart the hype flywheel that can bring money pouring in. Market participants know all of this, but may participate anyway in a greater fool play to capture their own share of money. As long as more buyers are drawn in, the fact that a particular coin has no real utility or intrinsic value is no obstacle to a quick payday[0].

This Ponzi-like dynamic is so frequently observed that denial is not a viable strategy for Web3 advocates. Instead, the most recent attitude seems to be that all of this is actually a good thing! Dror Poleg recently published In Praise of Ponzis, which makes the bizarre assertion that Ponzi dynamics are not only sustainable, but that these dynamics will underpin the future of the world economy:

What if there was a way to pay millions of people to watch a specific video at a specific moment in order to ensure that video goes viral and makes enough money to cover the cost of paying all these people — and then some?

In the old world, this would be too complicated. Just getting everyone’s bank details would take forever. But in our world, it is possible. It takes about five minutes to set up a smart contract that sends tokens to an unlimited number of people. The contract can be programmed to pay these people automatically once they complete a certain action online — and to pay them again when their actions bear fruit and drive up the value of a song/product/stock/anything.

This is, essentially, a pyramid scheme. A Ponzi. But it makes sense. It will be the dominant marketing method of the next decade and beyond.

I think the most charitable presentation of this concept is something like this: let’s say I create a new social network. I can fund the network by creating a new type of token that confers governance rights over the network and I can sell tokens to early adopters to fund the project[1]. If the network becomes very popular then the governance tokens will become more valuable and the early adopters will make money, incentivizing them to help grow the network. In reality there doesn’t need to be a project: users will voraciously pump any token they are invested in, hoping to draw in enough newcomers to make money. In classic Ponzi fashion, the newcomers have the same aspiration. The pretext of token utility via some future project ultimately adds no value and can be dispensed with.

The lofty aspirations of the Web3 community belie the application of tokens as, primarily, a vehicle for speculation and fraud. And even if someone is not directly perpetrating deception, there are certainly ethical questions to be asked of those making their money on the back of such an ecosystem. In almost comical fashion, Dror’s article is hawking a ‘Hype-Free Crypto’ course below the header and a merch line in the footer[2].

A Unified Theory of Mediocre Software

Software delivery at the turn of the century was not an especially great experience, as far as I can tell. To actually sell a product you had to send one version of your software to a manufacturer, print thousands of CD-ROMs, and wait for customers to go buy these physical copies at a retailer. In a lot of cases there was no way to update the software after it was shipped so picking the ‘final’ version was a big deal.

Nowadays things are much better; software updates can be provided easily over the internet and physical ‘prints’ of a piece of software are mostly non-existent. This has drastically lowered both the cost and importance of the moment a piece of software is ‘shipped’ and in fact there are entire philosophies based around deploying code as often as possible.

The ability to deliver features and fixes at an almost arbitrary pace, along with the general rise of the programming profession in status and compensation, has increased the options for dealing with software delivery deadlines. In the olden days, a delivery deadline (for, say, Excel 97) might have been nigh immovable. The product had to ship on a certain date, and that was that. If the team was behind schedule, they had better start working nights and weekends! These days, so-called crunch is less common outside the game industry. Software teams can deal with deadlines by cutting project scope, safe in the knowledge that additional code can be deployed as soon as it is done.

As delivery moves closer to the ‘continuous’ end of the spectrum, the concept of a ‘deadline’ or ‘release’ becomes blurrier. “If we remove this one minor feature from the release’s scope,” you might say, “the product is still ready to be given to customers. After all, this feature is only [marginal UI polish/for power users/a quality-of-life improvement/a minor bug fix].” And you would be right! Like most complex things, software products can have a Ship of Theseus quality — many pieces can be removed before the fundamental nature of the thing is changed.

This is actually a good exercise, and something that high-functioning software teams do often. When your business is selling software, there can be good reasons to have deadlines. The software does not exist in a vacuum; it must serve the needs of customers who need to make their own plans for the future. But plans get delayed, and in the face of a slipping deadline the two options are typically to push the deadline or cut the scope (nowadays you can’t force all your programmers to work weekends for three months because they’ll just quit). Pushing deadlines (especially deadlines communicated to customers) is a hard pill for most businesses to swallow. Much better, it seems, to trim and pick at the edges of the product until the revised scope fits comfortably in the time available.

This is all healthy software development behavior! When a project is behind schedule, cutting the scope is often the least impactful option for the business, the programmers, and the customer. And thanks to modern tooling, anything you cut can be delivered as soon as it’s done.

So your project is behind schedule. You can take a large, high-priority feature and subdivide it into five smaller tasks. Three of the tasks are the core functionality of the feature and they’ll still be high-priority, but the other two aren’t strictly necessary so they can be marked as low-priority and moved out of scope for the launch. You repeat this process a bit, time passes, and you deliver software at your deadline that meets the revised scope. The customers get their promised features, the business meets its obligations, and everyone gets promoted, woohoo!

Now you’d like to go back and finish those low-priority tasks that you cut out of the original scope, but the customer wants more features! And the customer is the one paying the business money, so their feature requests are definitely high-priority. You’ve got to scope these new features and get back to the customer with a target delivery date! And now you see the issue: any work cut from the original launch (because it wasn’t important enough) must now compete against the newest, shiniest objectives of the team. Not only is this battle unwinnable, but the same process will likely repeat — the backlog grows monotonically.
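To make the backlog claim concrete, here is a toy model of the loop described above. It is only a sketch under invented assumptions: the function name, the fixed number of new tasks per cycle, and the fixed team capacity are all hypothetical, and real teams are messier. The one rule taken from the argument is that new customer work always outranks anything previously cut.

```python
def simulate_backlog(cycles, tasks_per_cycle=10, capacity=6):
    """Return the backlog size after each release cycle.

    Hypothetical model: every cycle brings `tasks_per_cycle` new tasks,
    the team can finish `capacity` tasks, and new work always outranks
    anything already sitting in the backlog.
    """
    backlog = 0
    history = []
    for _ in range(cycles):
        # New high-priority work is done first; whatever does not fit is cut.
        done_new = min(capacity, tasks_per_cycle)
        cut = tasks_per_cycle - done_new
        # Only leftover capacity (if there is any) drains the old backlog.
        spare = capacity - done_new
        backlog = max(0, backlog - spare) + cut
        history.append(backlog)
    return history


history = simulate_backlog(cycles=5)
print(history)  # [4, 8, 12, 16, 20]
```

As long as incoming requests meet or exceed capacity, spare capacity is zero and the backlog can only grow. In this model it shrinks only when a cycle brings fewer new tasks than the team can finish.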

I think this basic pattern is responsible for most of the generally mediocre software currently in vogue, especially enterprise software. If you’re using a tool that is clunky, poorly integrated in its ecosystem, or missing obvious helpful features, it is probably a result of the incentives and processes outlined above.

There doesn’t seem to be a great way to set up organizational incentives to prevent this problem. I think Apple successfully fended it off (at least for a while), partly by having Steve Jobs micromanage lots of people and be a tremendous asshole. Prevention does probably fall to leadership — likely director-level or above at medium and large organizations. Otherwise, the benefits of ‘product excellence’ are too remote to compete with concrete business objectives.

It also might be the case that truly making excellent products is not actually good business, that there is a diminishing marginal return to some work that makes it simply a waste past a certain point. If that’s the conclusion of the market then I’m disappointed. But I think it’s more likely that this is just a case of second and third-order effects being inaccurately discounted by the incentive structures of companies. The difficulty is not actually building great products, but building organizations that treat that as a high priority.

Hockey Tickets

If you want to go to a home game for a popular NHL team there are limited options for getting tickets. Most of the available ticket inventory will be from season ticket holders reselling their tickets. These tickets are often expensive since the holders would like to recoup some of the money they spent on buying the season tickets. As game time gets closer, it would make sense for ticket sellers to lower their prices to ensure a sale (since if they don’t sell at all then they get no benefit). It would also make sense for the ticket market to be fairly efficient if inventory were large enough. At any time (and for any quality of ticket) there is some market-clearing price which lowers as puck drop approaches. But this is all based on inference and anecdote. Is it possible to get data on the hockey ticket market to understand how it functions?

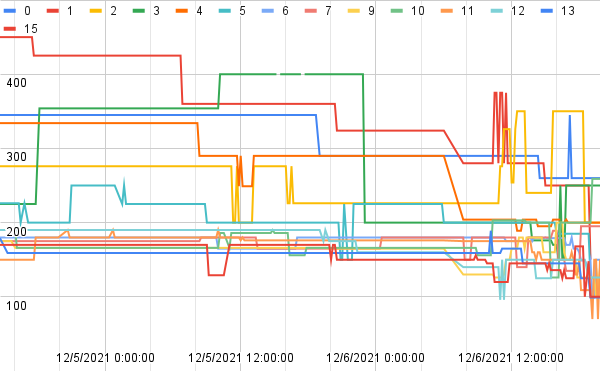

By a truly unbelievable coincidence, I was recently out for a walk when I saw a small package fall off a truck ahead of me. Inside was a USB drive with time series data of ticket availability for several recent Seattle Kraken home games! While there is not much correlation of absolute prices between games, general volume and price reduction patterns are consistent. For example, plotting the cheapest ticket by row at a particular time confirms several intuitive guesses:

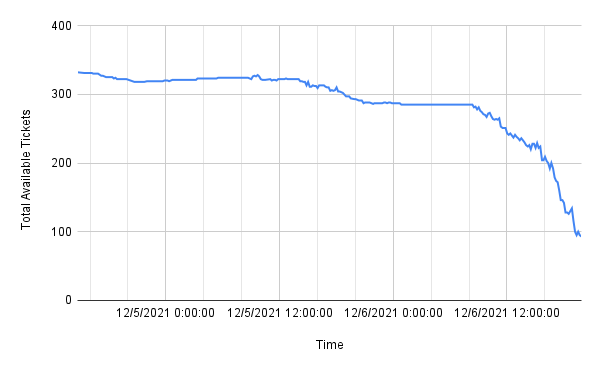

Tickets get cheaper as a game approaches, but most price movement happens the day of the game. Pricing is also not uniform: cheaper than average tickets occasionally appear but are quickly bought — look at the areas where a new line dips below all the others. Observing the total volume of available tickets by time shows when buying happens:

Fascinating!
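For the curious, the ‘cheapest ticket by row at a particular time’ series can be computed roughly like this. This is a sketch only: the tuple layout, the row labels, and every price below are made up for illustration and are not the actual Kraken data, and `cheapest_by_row` is a hypothetical helper name.

```python
from collections import defaultdict

# Hypothetical listing snapshots: (hours_before_puck_drop, row, price_usd).
listings = [
    (48, "A", 180.0), (48, "A", 210.0), (48, "B", 150.0),
    (24, "A", 175.0), (24, "B", 150.0), (24, "B", 140.0),
    (2, "A", 120.0), (2, "B", 95.0), (2, "B", 110.0),
]


def cheapest_by_row(rows):
    """Map each row to [(hours_before, min_price), ...], ordered toward game time."""
    best = defaultdict(dict)
    for hours, row, price in rows:
        current = best[row].get(hours)
        # Keep only the lowest asking price seen for this row at this snapshot.
        if current is None or price < current:
            best[row][hours] = price
    # Sort by hours remaining, descending, so each series runs toward puck drop.
    return {row: sorted(series.items(), reverse=True) for row, series in best.items()}


series = cheapest_by_row(listings)
print(series["B"])  # [(48, 150.0), (24, 140.0), (2, 95.0)]
```

Each row's list is one line on a plot like the one above. With this invented data the price falls as the game approaches, but that is baked into the sample numbers rather than a finding.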

plz In Review

So, this has been fun! I opened plz 1 with “I have thought for a while that I should write more” and that’s pretty much what I did. All told, these ten issues amount to about 25k words which is a satisfying number for me. My original thought was kind of like ‘I’ll do for Hacker News what Money Stuff does for finance news’ because it seemed like a natural fit, but as time went on I think I shifted toward general topical writing and personal opinions and away from timely news analysis and explanation. Maybe that’s because I don’t read as much tech news as I thought I did. Regardless, I found it challenging to identify news that was interesting to write about.

Another (perhaps related) recurring issue was that my feeling of ‘this would make a good newsletter section’ was inversely correlated with how much I knew about the topic. That might just be a bad heuristic (if you already know something it can seem boring to you but be interesting to people who don’t know it), but I really tried to avoid being boring, probably at the expense of more writing volume. On the flip side I also tried to target topics where I was either already an expert or I felt the topic was too vague to make ignorant analysis mistakes. This was probably also not optimal for improvement but at least my ego is intact! Oh well.

I think plz 4 is the strongest issue overall and also has the best single section in ‘Google v. Oracle’. That section nails my original goal of making a timely news story with technical underpinnings legible to a wide audience and I think both the tone and pace are excellent. There is also a well-timed humorous link to incredibly niche content which I think is essential. I am also proud of plz 3 (aka ‘the Bitcoin one’) because it crystallized a series of opinions I’ve long had but never fully articulated.

Anyway, that’s all for now. Thanks for reading!

Bookmarks

Web3? I have my DAOts. Beware isolated demands for rigor. Navigating equality and utility.

[0] All the problems with tokens apply equally to NFTs — in fact, it’s a meaningless semantic distinction. The ongoing NFT hype is easily explained by these exact dynamics, further underscoring the irrelevance of common objections (“yOU DOn’T acTualLy oWn THE aRT!”).

[1] Or fund my new vacation home after I abscond with the money. Could go either way!

[2] I am also super not a fan of the idea that we should commodify every human act and interaction. This seems obvious to me, but that disturbing theme appears in Dror’s piece as well as The Pareto Funtier so I feel obliged to note it. But that’s sort of a separate cultural issue so it’s relegated to this footnote.